The introduction of the transformer architecture in 2017, in the paper "Attention Is All You Need", changed how natural language processing systems are built. Before transformers, recurrent neural networks (RNNs) and LSTMs dominated the NLP landscape, but they read text one word at a time, which made long-range dependencies hard to learn and training hard to parallelize. Transformers address both limitations with self-attention, a mechanism that lets the model process an entire sequence at once while relating every word to every other word.

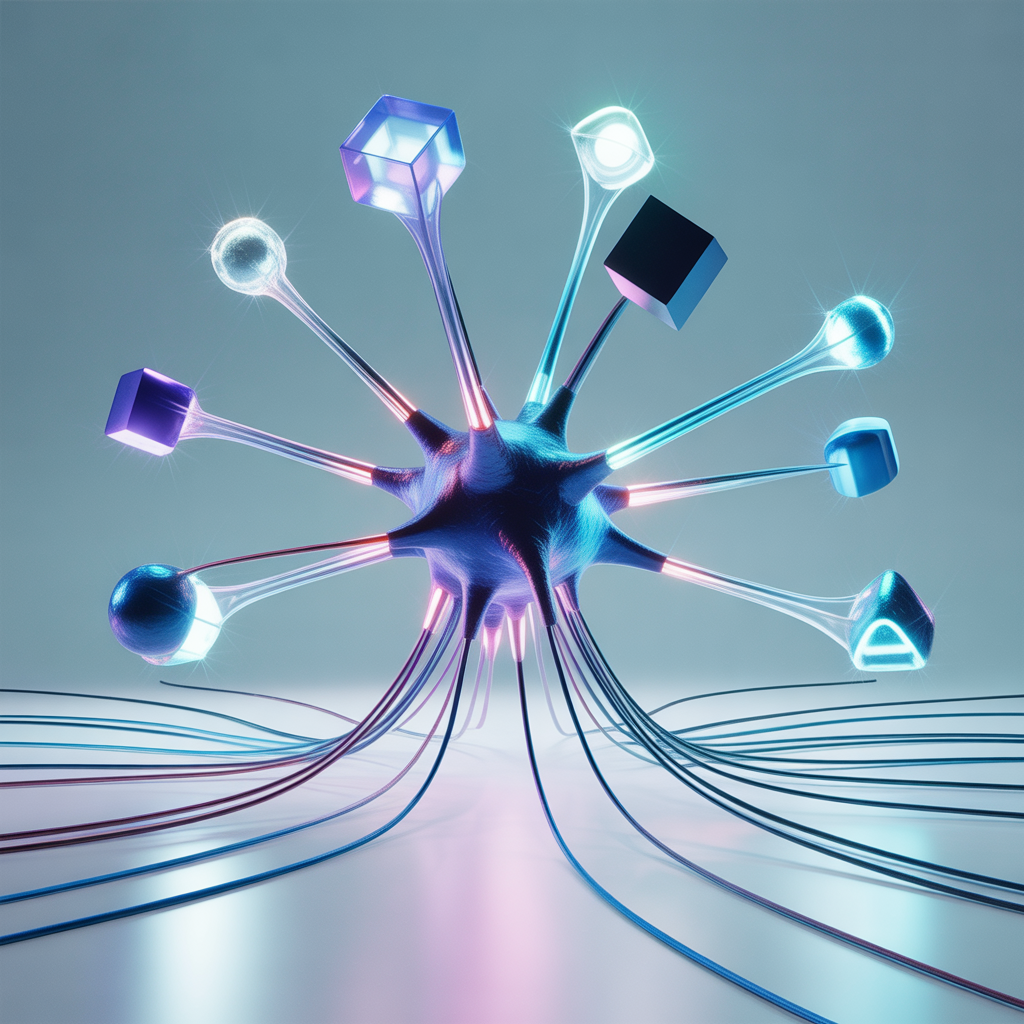

At the core of every transformer is the attention mechanism, which lets the model weigh different parts of the input when processing each word. Unlike sequential models that process text one word at a time, transformers examine all words in parallel and learn which words are most relevant to each other. This parallelism speeds up training on modern hardware and makes it easier to learn linguistic patterns that span long distances in the text.

The Attention Mechanism Explained

Self-attention works by computing three vectors for each word (more precisely, each token) in the input: a query, a key, and a value. The model calculates attention scores by taking the dot product of each word's query vector with the key vectors of every word in the sequence, including its own, which determines how much focus to place on each word when encoding the current one. The scores are scaled by the square root of the key dimension, normalized with a softmax so that they sum to 1, and then used to form a weighted sum of the value vectors, producing a context-aware representation for each word.
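
The computation can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: it assumes the standard scaled dot-product formulation (dot-product scores, scaling by the square root of the key dimension, softmax normalization), and the names `self_attention`, `matvec`, and the toy identity projection matrices are hypothetical choices made for readability. Real models use learned projection matrices and a tensor library.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def self_attention(embeddings, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a list of word embeddings."""
    queries = [matvec(w_q, e) for e in embeddings]
    keys = [matvec(w_k, e) for e in embeddings]
    values = [matvec(w_v, e) for e in embeddings]
    scale = math.sqrt(len(keys[0]))

    outputs, all_weights = [], []
    for q in queries:
        # Compare this word's query against every key, including its own.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # The weighted sum of value vectors is the context-aware representation.
        context = [sum(w * v[i] for w, v in zip(weights, values))
                   for i in range(len(values[0]))]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights

# Toy input: 3 words with 2-dimensional embeddings.
# Identity projections are an assumption for illustration; real ones are learned.
identity = [[1.0, 0.0], [0.0, 1.0]]
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(words, identity, identity, identity)
```

Each row of `weights` sums to 1 and shows how strongly one word attends to every word in the sequence; each entry of `outputs` is the corresponding blend of value vectors.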

Multi-head attention extends this concept by running several attention operations in parallel, each with its own learned query, key, and value projections, so the model can attend to different aspects of the input simultaneously. One attention head might focus on syntactic relationships like subject-verb agreement, while another captures semantic similarities or positional patterns. The outputs of all heads are concatenated and passed through a final linear projection, giving the transformer a richer representation of the text than any single head could provide.
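
A rough sketch of the multi-head idea follows. To stay short it makes two simplifying assumptions that differ from real transformers: each head works on a slice of the embedding rather than on its own learned projection, and the final output projection is omitted. The function names `attend` and `multi_head_attention` are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
        out.append([sum(w * v[i] for w, v in zip(weights, values))
                    for i in range(len(values[0]))])
    return out

def multi_head_attention(embeddings, num_heads):
    """Run attention independently per head, then concatenate the results."""
    d_model = len(embeddings[0])
    if d_model % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    d_head = d_model // num_heads

    head_outputs = []
    for h in range(num_heads):
        # Simplification: slice the embedding instead of applying learned projections.
        part = [e[h * d_head:(h + 1) * d_head] for e in embeddings]
        head_outputs.append(attend(part, part, part))

    # Concatenate the per-head vectors back into one vector per word.
    return [[x for head in head_outputs for x in head[t]]
            for t in range(len(embeddings))]

words = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
combined = multi_head_attention(words, num_heads=2)
```

Because each head sees a different slice, the two heads compute different attention weights over the same three words, which is the property the paragraph above describes.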

From BERT to GPT: Different Transformer Variants

BERT, introduced by Google in 2018, uses a bidirectional transformer encoder to create deep contextual word representations. It is trained on a masked language modeling task: some words in the input are hidden, and BERT learns to predict them from the surrounding context on both the left and the right. This bidirectional view makes BERT well suited to tasks that require close reading of the input, such as question answering, named entity recognition, and sentiment analysis.
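
The data preparation behind masked language modeling can be illustrated with a small sketch. It is deliberately simplified: every selected word is replaced by a `[MASK]` placeholder, whereas BERT's actual procedure also sometimes substitutes a random word or leaves the word unchanged. The `mask_tokens` helper and its parameters are hypothetical names for this example.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens.

    Returns (masked_tokens, labels). labels holds the original token at
    masked positions and None elsewhere, so a model would only be scored
    on the positions it has to reconstruct.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

sentence = "the cat sat on the mat".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3, seed=1)
```

During training, the model receives `masked` as input and is asked to recover the entries of `labels` that are not `None`, using the visible words on both sides.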

GPT models take a different approach, using a unidirectional (left-to-right) transformer decoder architecture optimized for text generation. Each position can attend only to the words that precede it, and the model is trained to predict the next word in a sequence. At inference time it generates text one word at a time, feeding each prediction back in as input. This autoregressive approach makes GPT particularly effective for creative writing, code generation, conversation, and other tasks that require fluent text production.
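
The autoregressive loop itself is simple, as the following sketch shows. The "model" here is a hypothetical stand-in: a hand-written table of next-word counts that looks only at the last word, where a real GPT would score candidates with a transformer over the whole preceding context. Greedy decoding (always picking the highest-scoring word) is also an assumption; sampling strategies are common in practice.

```python
def next_word_scores(context, bigram_counts):
    """Toy stand-in for a language model: score candidates from the last word only."""
    return bigram_counts.get(context[-1], {})

def generate(prompt, bigram_counts, max_new_tokens=5, eos="<eos>"):
    """Greedy autoregressive generation: append the best next word, then repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_word_scores(tokens, bigram_counts)
        if not scores:
            break  # the toy model has no continuation for this word
        best = max(scores, key=scores.get)
        if best == eos:
            break
        tokens.append(best)  # the prediction becomes part of the next input
    return tokens

# Hypothetical next-word counts, for illustration only.
bigram_counts = {
    "the": {"cat": 3, "mat": 1},
    "cat": {"sat": 2},
    "sat": {"on": 2},
    "on": {"the": 2},
}
generated = generate(["the"], bigram_counts, max_new_tokens=4)
# generated == ["the", "cat", "sat", "on", "the"]
```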

Practical Applications and Implementation

Using transformers in your own applications has become far more accessible thanks to libraries like Hugging Face Transformers, which provides pre-trained models and simple APIs for common NLP tasks. For many use cases, you can rely on transfer learning: fine-tune an existing model on your domain data, and you will often reach strong performance with far less data and compute than training from scratch would require.

When choosing a transformer model, start from the task. BERT-style (encoder-only) models excel at understanding and classifying text, making them a good fit for sentiment analysis, content moderation, and information extraction. GPT-style (decoder-only) models are better suited to generation tasks like chatbots, content creation, and code completion. Encoder-decoder models like T5 and BART combine both components, which suits tasks that map one text to another, such as summarization and translation.

Optimization and Deployment Considerations

Transformers deliver strong accuracy, but they can be computationally expensive, especially for real-time applications. Model distillation addresses this by training a small "student" model to reproduce the outputs of a large "teacher" model, yielding a compact variant that keeps most of the accuracy while running much faster. DistilBERT, for example, is reported by its authors to retain 97% of BERT's language-understanding performance while being 40% smaller and 60% faster.
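
To make the idea concrete, here is a sketch of a generic soft-target distillation loss: the student is penalized by the KL divergence between the teacher's and the student's temperature-softened output distributions. This is an assumed, textbook-style formulation for illustration and not DistilBERT's exact training objective. The function names, logits, and the temperature value are all hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures flatten the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # The T^2 factor keeps the loss magnitude comparable across temperatures.
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different class
loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
```

A student whose outputs resemble the teacher's gets a small loss (`loss_close`), while one that disagrees gets a large one (`loss_far`). Minimizing this loss is what pulls the student toward the teacher's behavior.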

For production deployment, consider using optimized inference engines like ONNX Runtime or TensorRT, which can significantly accelerate transformer models through quantization, operator fusion, and hardware-specific optimizations. These optimizations make it feasible to run sophisticated transformer models in latency-sensitive applications and on resource-constrained devices.
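
Quantization is the easiest of these optimizations to illustrate on its own. The sketch below shows symmetric per-tensor int8 quantization in plain Python: floats are mapped to integers in [-127, 127] using a single scale factor. It is a conceptual example only and does not use or mirror the ONNX Runtime or TensorRT APIs. The function names and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in quantized]

weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most `scale / 2` per value. Inference engines apply the same principle with more refined schemes, such as per-channel scales.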

The Future of Transformer Architectures

Transformer architectures continue to evolve rapidly, with recent work targeting efficiency, multimodal understanding, and longer context windows. Models like GPT-4 and Claude suggest that scaling transformers with more parameters and training data continues to unlock new capabilities. Meanwhile, efficient variants like Linformer and Reformer attack the main bottleneck of standard self-attention, whose cost grows quadratically with sequence length, by approximating attention at lower cost, which makes much longer sequences practical.

Understanding transformer architectures is essential for anyone working with modern NLP. Whether you're building chatbots, analyzing customer feedback, or creating content generation systems, transformers are the foundation of current state-of-the-art results. Knowing how attention works, which model family fits which task, and how to optimize models for deployment will help you make sound design decisions as the field continues to develop.